The AI Regulation Race

Can AI ever be truly safe?

As AI’s influence explodes in healthcare, finance, and beyond, regulations are being actively shaped with the aim of trustworthiness.

While the vast array of AI applications makes an easy safety measure impossible, the National Institute of Science and Technology (NIST) is leading the charge in ensuring safe and trustworthy AI.

In the AI regulation race, new developments are occurring daily. On April 29th, 2024, NIST released four AI documents, 180 following a US executive order on safe AI development. These documents help developers manage risks in AI, secure software development, and address issues with synthetic content. They’re also working on global AI standards. This blog dives into the ongoing effort to regulate AI.

NIST AI Resource Roadmap

This diagram from the NIST AI resource center website outlines a roadmap for improving the AI Risk Management Framework (AI RMF). This roadmap suggests ways for NIST and other organizations (public or private) to collaborate and develop the AI RMF further. The focus is on filling knowledge gaps and creating best practices for trustworthy AI. As AI technology advances, the roadmap itself will adapt.

This diagram from the NIST AI resource center website outlines a roadmap for improving the AI Risk Management Framework (AI RMF). This roadmap suggests ways for NIST and other organizations (public or private) to collaborate and develop the AI RMF further. The focus is on filling knowledge gaps and creating best practices for trustworthy AI. As AI technology advances, the roadmap itself will adapt.

Among these efforts is the Artificial Intelligence Safety Institute Consortium (AISIC). As written in the Consortium agreement, the purpose is to “. . . establish a new measurement science that will enable the identification of proven, scalable, and interoperable measurements and methodologies to promote safe and trustworthy development and use of Artificial Intelligence (AI), particularly for the most advanced AI.”

This article discusses the Consortium, as well as the Blueprint for an AI Bill of Rights. We’ll focus on the US approach to AI regulation, but also include the EU and China for a reason: these three regions are major players in the development and regulation of AI. Their approaches can inform each other and the broader global conversation around AI governance.

The US is a leader in AI research and development, and its regulatory decisions will have a significant impact on the global AI landscape.

The EU has taken a proactive approach to AI regulation, with the GDPR being a prime example. Their approach emphasizes ethics and data privacy.

China is another major player in AI development, and its approach to regulation is likely to be shaped by its unique political and economic context.

By comparing the approaches of these three regions, the article can provide a more comprehensive picture of AI governance on the global scale.

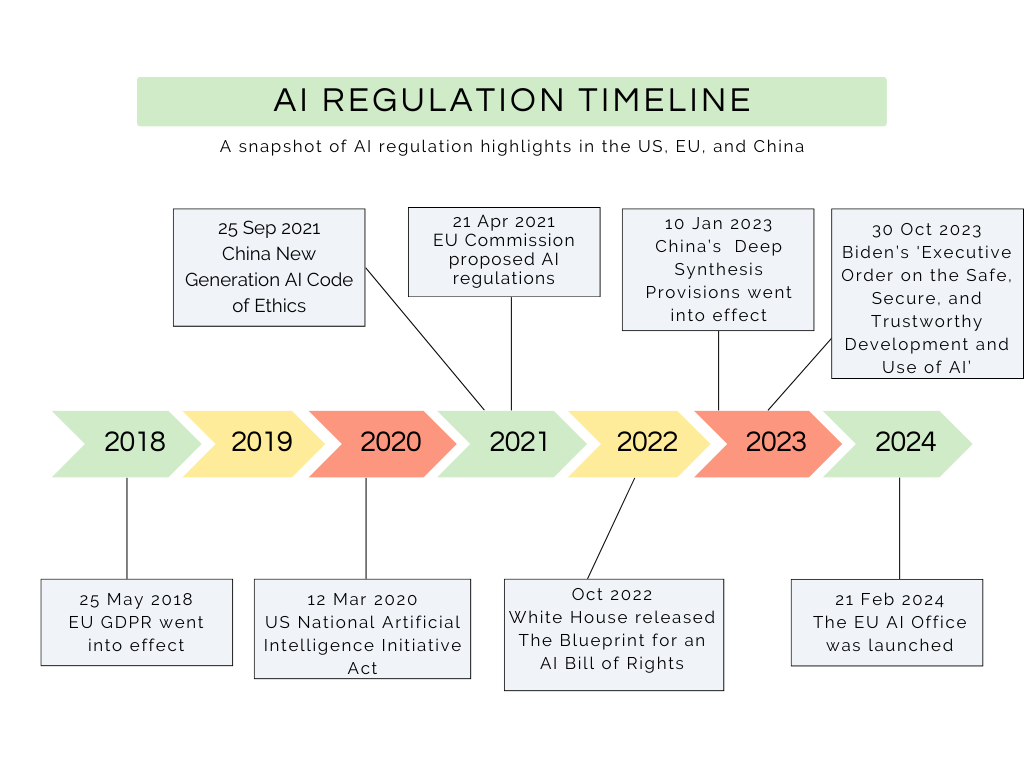

This timeline gives a few of the highlights from the major world actors in AI regulation, starting with the General Data Protection Regulation (GDPR) in the EU in 2018. The GDPR is a European law that gives people in the EU control over their personal information. It requires companies to be transparent about how they use data and gives individuals rights to access, correct, or erase their data. The GDPR influenced regulations on data protection throughout the world, and a similar trend is anticipated with AI regulation.

The prevailing approach to AI governance around the world has been to establish national strategies or ethics policies before enacting legislation. This has absolutely been true in the United States.

In 2020, the US National AI Initiative Act was introduced. The National Artificial Intelligence Initiative Act of 2020 created a national program to advance AI research, education, and development. It established various committees and offices to oversee the initiative, conducted research on AI’s impact, and developed standards for AI systems.

Concerns about responsible AI development gained momentum in 2021 with China and the EU proposing regulations.

On April 21st, 2021, the European Commission, which is the governing body of the European Union (EU), released a proposal for a new law. This law aimed to regulate the development and use of artificial intelligence (AI) technologies within the EU. The purpose of this proposal was to make sure AI is used safely and ethically throughout the European Union.

In September 2021 a Chinese government committee issued “Ethical Norms for New Generation Artificial Intelligence.” These norms aimed to establish ethical guidelines for using AI in China. They addressed data privacy, human control over AI systems, and preventing monopolies in the AI sector. However, the document lacked specifics on enforcement mechanisms and penalties for non-compliance.

In October 2022 in the US, the White House released the Blueprint for an AI Bill of Rights, discussed in more detail below. In early 2023, we saw further development with China’s Deep Synthesis Provisions, aiming to control deepfakes. This will drastically alter how AI creates content for its massive 1.4 billion population due to the law’s wide-reaching impact.

2023 also brought President Biden’s Executive Order on the Safe, Secure, and Trustworthy Development and Use of AI. This new government rule aims to make sure AI is developed and used safely and securely. It also protects people’s privacy, fights discrimination, and helps both businesses and workers. The rule encourages innovation and competition in the US, making it a leader in AI technology.

Most recently, in February 2024, the European Union solidified its commitment with the launch of a dedicated AI office. The EU has taken massive strides with the EU AI Act. The EU AI Act is the world’s first major law regulating AI. It classifies AI applications into risk categories: high-risk applications (like job applicant ranking tools) face strict rules, while minimal-risk uses (like video games) are unregulated. The Act aims to ensure safe and ethical development of AI in the EU.

The US has aligned with the EU on this, and new developments are occurring daily as the race to AI regulation trudges on. Now that we have seen a brief history of the last few years of notable AI regulations, let’s look at one example of recommendations from the US Blueprint for an AI Bill of Rights.

The Blueprint for an AI Bill of Rights

Setting the tone for the NIST working grounds, we can take a look at the AI Bill of Rights as a blueprint for current and future efforts. As you review this section, think about how the principles could be turned into laws and regulations, and how this will function in practice.

There are many examples of advancements in technology and data collection which are threatening fairness and fundamental rights, including unequal loan algorithms, unsafe medical AI, and unchecked social media data collection, not to mention AI’s threat to democracy.

While beneficial, AI tools restrict access to resources, perpetuate bias, and violate privacy. The AI Bill of Rights outlines principles to safeguard people from harmful AI and promote its ethical use. This framework, informed by public experiences and expert input, offers a guide for policymakers and developers to ensure AI protects our rights and values.

Here are the five principles of the AI Bill of Rights:

Safe and Effective Systems

The AI Bill of Rights aims to protect you from unsafe AI and biased data use. Developers should involve experts and the public to identify risks and ensure AI is designed and tested to be safe, effective, and fair.

Algorithmic Discrimination Protections

The AI Bill of Rights fights against algorithmic discrimination. It ensures AI is designed and tested to be fair and unbiased, protecting people from unfair treatment based on various characteristics.

Data Privacy

The AI Bill of Rights protects your data privacy. It gives you control over how your data is collected and used, and restricts its use in sensitive areas like health and finance. You should also be free from excessive surveillance.

Notice and Explanation

The AI Bill of Rights ensures transparency in AI use. You deserve to know when an automated system is making decisions that affect you, and how it arrives at those choices.

Human Alternatives, Consideration, and Fallback

You should have control over your AI interactions. You should be able to opt-out of AI systems and have access to a human when you run into problems and in sensitive domains like criminal justice, health, employment, and education.

Overall, the AI Bill of Rights serves as a critical framework for ensuring the responsible development and deployment of automated systems. By outlining core principles like fairness, transparency, and accountability, it aims to safeguard individuals and communities from potential harms associated with AI. This framework fosters a future where AI strengthens democratic values by promoting equal opportunity, protecting civil liberties, and ensuring access to essential resources. The AI Bill of Rights empowers us to harness the power of AI innovation while safeguarding the fundamental rights that underpin a just and thriving society. How do we address the vast gap between intangible statements of intent and tangible, enforceable legislation? That is where the AISIC comes in.

In a February 7th, 2024 announcement, the U.S. Secretary of Commerce, Gina Raimondo, unveiled the leadership team responsible for guiding the forthcoming U.S. AI Safety Institute (USAISI) housed within the NIST. This is following the AI Bill of Rights and President Biden’s executive order on the safe, secure, and trustworthy development and use of AI.

The Artificial Intelligence Safety Institute Consortium (AISIC)

The US Artificial Intelligence Safety Institute (USAISI) is part of the National Institute of Standards and Technology (NIST). It’s tasked with creating instructions, testing AI systems, and researching how to achieve the goals outlined in President Biden’s Executive Order on AI. The AISIC initiative, spearheaded by the NIST, seeks to foster the development and deployment of trustworthy and safe AI. The U.S. AI Safety Institute Consortium (AISIC) will convene a broad range of participants, encompassing AI developers and users, academic researchers, government and industry representatives, and civil society organizations.

The AISIC is leading the charge for secure AI development. I’m fortunate to work on this critical issue at Accel AI Institute, a member of the consortium.

Accel AI Institute contributes to the Consortium’s efforts along with over two hundred stakeholders through strategic advice, resource sharing, and consulting on ethical AI development for all members. Our focus areas include bias mitigation, explainable AI, and ethical development practices. Additionally, Accel AI Institute’s LatinX in AI initiative promotes diversity and inclusion within the field of AI.

This Consortium will leverage the expertise of leading corporations and innovative startups alongside academic and civil society thought leaders who are shaping our understanding of AI’s societal impact. Membership includes the nation’s largest enterprises, cutting-edge AI system and hardware creators, key figures from academia and the general public, and representatives from professions heavily reliant on AI.

Additionally, state and local governments, along with non-profit organizations such as Accel AI are part of this collaborative effort. The consortium’s focus extends beyond the United States, aiming to collaborate with international organizations to establish interoperable and effective safety standards for AI on a global scale.

Within the AISIC are several working groups, which can shift and develop as collaboration ensues. The working groups include the following: Risk Management for Generative AI, Synthetic Content, Capability Evaluations, Red-Teaming, and Safety & Security. These working groups have now been broken down into task force teams to complete the research and development that has been identified.

Although I cannot yet share specifics on research and implementation from these working groups until we publish our findings, I can provide some basic definitions of what the working groups are covering:

Generative AI can create incredibly realistic and believable content, but this poses risks. Malicious actors can use it to spread misinformation, create deepfakes to damage reputations, or generate biased content that amplifies societal inequalities. Even with good intentions, generated content can be unintentionally biased or misleading, reflecting the data used to train the AI. This can perpetuate societal inequalities or simply create confusion due to the difficulty of distinguishing real from artificial content.

Synthetic content in AI refers to computer-generated data that mimics real-world content. This can include realistic-looking images, videos, or even text that’s indistinguishable from human-created content. It’s often used for training AI models, but also raises concerns about deepfakes, misinformation, and potential copyright issues. Synthetic content is another way of explaining misinformation, and what is called hallucination or fabrication. Misinformation can have huge consequences, such as swaying elections. This is especially important as billions of people are going to the polls this year.

Capability evaluations of AI test how well a system performs its tasks, assessing accuracy, robustness, fairness, and explainability to ensure its reliability and trustworthiness. Imagine a company developing an AI loan system. To ensure a trustworthy system, they test it beyond just accuracy. They see if it treats everyone fairly regardless of background, can handle unusual finances, and explains its loan decisions clearly. This helps reduce bias and promotes fair lending practices.

Red-teaming for AI involves simulating attacks on AI models to identify vulnerabilities and weaknesses, aiming to improve their safety and prevent them from being misused. Imagine researchers are developing a powerful generative AI that can write different creative text formats, like poems or news articles. To test its security, they might “red team” it by feeding the AI prompts designed to generate harmful content. For example, they might see if the AI can be tricked into writing hateful slogans or create fake news articles that are incredibly convincing but completely untrue. By identifying these vulnerabilities, the researchers can improve the safeguards in the gen-AI and prevent it from being misused to generate malicious content.

Safety and Security can be defined separately:

Safety: Defined by NIST is “freedom from conditions that can cause death, injury, occupational illness, damage to or loss of equipment or property, or damage to the environment”

Security: Focused on information systems and data, NIST’s Computer Security Resource Center glossary defines it as “the protection of information systems from unauthorized access, use, disclosure, disruption, modification, or destruction”.

NIST’s AI Safety Institute, which is supported by the AISIC, has three main goals:

Collaboration: Establish a long-term, open consortium where researchers, industry, and the public can work together on trustworthy AI measurement science.

Industry Standards: Develop tools and best practices to help industries create safe, secure, and reliable AI systems.

Risk Assessment: Create methods for identifying and evaluating potential harms caused by AI systems.

This is all in an effort to “. . . ready the U.S. to address the capabilities of the next generation of AI models or systems, from frontier models to new applications and approaches, with appropriate risk management strategies.”(NIST, 2024)

All in all, the Consortium is an important and necessary step in steering a good path forward for AI oversight. However, I anticipate a lot of challenges and needs that we cannot yet anticipate.

This image was generated by https://runwayml.com/ai-tools/text-to-image/ with a prompt to make an image of a futuristic train with unfinished tracks.

Imagine AI as a high-speed train with the potential to revolutionize transportation (solving complex problems, improving efficiency). However, unlike established railways, the tracks for AI are still under construction (AI is a developing field). Unforeseen obstacles (ethical issues, technical limitations) could derail the train (cause serious problems) despite good intentions. The responsibility lies with the engineers (developers, ethicists, policy makers) to carefully lay the tracks, ensuring they are safe, well-maintained, and lead to the desired destination (ethical and beneficial applications).

Conclusion: Balancing Progress and Responsibility with AI

While AI offers immense potential to revolutionize various aspects of our lives, navigating its development requires careful consideration. Like a high-speed train on incomplete tracks, AI is venturing into uncharted territory. The decisions and recommendations made by those working within the AISIC and others are crucial for laying a safe and ethical foundation for this powerful technology.

Implementing these recommendations on a global scale presents a challenge. AI’s rapid growth and global reach make regulation and monitoring complex. Here, fostering international collaboration and knowledge sharing becomes essential.

Furthermore, true “safe” and “trustworthy” AI goes beyond technical considerations. We must address the ethical implications — from data bias and neocolonial appropriation to environmental concerns related to data centers. Focusing solely on Hollywood-style AI threats overshadows the more insidious issues of social inequities and power imbalances that AI can perpetuate.

The path forward lies in prioritizing human well-being and environmental sustainability. Initiatives like the AI Bill of Rights and the AISIC offer a valuable framework for developing AI guided by ethics and human values. By holding developers and regulators accountable and amplifying the voices of those advocating for people and the planet, we can ensure that AI fulfills its potential for positive change.