Reinforcement Learning as a Methodology for Teaching AI Ethics

One of the biggest questions when considering ethics for artificial intelligence (AI) is how to implement something so complex and contested in machines that, by contrast, excel at precision. Some say this is impossible. “Ethics is not a technical enterprise, there are no calculations or rules of thumb that we could rely on to be ethical. Strictly speaking, an ethical algorithm is a contradiction in terms.” (Vachnadze, 2021)

Dismissing ethics training for AI because of its technical challenges will not help society, since the technology is advancing regardless and ethical concerns continue to arise.

So we turn to reinforcement learning, a bottom-up approach, as a promising avenue for moving toward AI with positive social impact.

What Is Reinforcement Learning and Where Does It Originate?

“Reinforcement Learning (RL) is a type of machine learning technique that enables an agent to learn in an interactive environment by trial and error using feedback from its own actions and experiences.” (Bhatt, 2018)

Reinforcement learning differs from other approaches that rely on top-down rules. Instead, the system learns as it goes: it makes many mistakes, learns from them, and adapts by sensing its environment. It is typically trained in a simulated environment using a reward signal that gives positive or negative feedback, so the agent can try many different actions with no real-world consequences until it gets things right.
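To make the trial-and-error idea concrete, here is a minimal sketch in Python, assuming a toy simulated environment invented for illustration: three hypothetical actions (`a`, `b`, `c`) that return +1 or -1 with hidden probabilities. The agent keeps a running average of the feedback for each action and uses an epsilon-greedy rule, mostly picking its current best guess and occasionally exploring. It is not drawn from any of the cited sources.

```python
import random

# Hypothetical simulated environment: each action yields +1 (positive feedback)
# or -1 (negative feedback) with a hidden probability of success.
SUCCESS_PROB = {"a": 0.2, "b": 0.5, "c": 0.8}


def feedback(action: str, rng: random.Random) -> int:
    """Return +1 or -1 for the chosen action; nothing happens in the real world."""
    return 1 if rng.random() < SUCCESS_PROB[action] else -1


def train(episodes: int = 5000, epsilon: float = 0.1, seed: int = 0) -> dict:
    rng = random.Random(seed)
    estimates = {a: 0.0 for a in SUCCESS_PROB}  # average feedback per action
    counts = {a: 0 for a in SUCCESS_PROB}
    for _ in range(episodes):
        # Trial and error: usually exploit the best estimate, sometimes explore.
        if rng.random() < epsilon:
            action = rng.choice(list(SUCCESS_PROB))
        else:
            action = max(estimates, key=estimates.get)
        reward = feedback(action, rng)
        counts[action] += 1
        # Incremental mean: each success or mistake nudges the estimate.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


if __name__ == "__main__":
    learned = train()
    print(learned, "best action:", max(learned, key=learned.get))
```

Because the environment is simulated, the agent's early mistakes (for example, repeatedly choosing `a`) cost nothing in the real world, which is the advantage of training in simulation.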

RL is commonly used to train algorithms to play games such as Go (AlphaGo) and chess. Early on, the ideas behind RL were studied in animals as well as in early computers.

“The history of reinforcement learning has two main threads, both long and rich, that were pursued independently before intertwining in modern reinforcement learning. One thread concerns learning by trial and error that started in the psychology of animal learning. This thread runs through some of the earliest work in artificial intelligence and led to the revival of reinforcement learning in the early 1980s. The other thread concerns the problem of optimal control and its solution using value functions and dynamic programming. For the most part, this thread did not involve learning. Although the two threads have been largely independent, the exceptions revolve around a third, less distinct thread concerning temporal-difference methods. . . All three threads came together in the late 1980s to produce the modern field of reinforcement learning,” (Sutton and Barto, 2015)

Notably, this form of learning originated partly in the training of animals, and similar reward-based techniques are often used in teaching children. It has existed and evolved over many decades.

How is reinforcement learning used for machine learning?

Now let’s explore more of this bottom-up approach to programming and how it functions for artificial intelligence. “Instead of explicit rules of operation, RL uses a goal-oriented approach where a ‘rule’ would emerge as a temporary side-effect of an effectively resolved problem. That very same rule could be discarded at any moment later on, where it proves no longer effective. The point of RL modeling is to help the A.I. mimic a living organism as much as possible, thereby compensating for what we commonly held to be the main draw-back of Machine-Learning: The impossibility of Machine-Training, which is precisely what RL is supposed to be.” (Vachnadze, 2021)

This style of learning, which throws the rule book out the window, could be promising for something like ethics, where the rules are neither consistent nor universally agreed upon. Because ethics is situation-dependent, teaching a broad rule is not always sufficient. Could RL be the answer?

“Reinforcement learning problems involve learning what to do — how to map situations to actions — so as to maximize a numerical reward signal. . . These three characteristics — being closed-loop in an essential way, not having direct instructions as to what actions to take, and where the consequences of actions, including reward signals, play out over extended time periods — are the three most important distinguishing features of reinforcement learning problems.” (Sutton and Barto, 2015)
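The three features in this quotation can be seen in a small tabular Q-learning sketch. Q-learning is one standard RL algorithm, swapped in here for illustration; the quoted passage does not prescribe it. The corridor environment, its five states, and the parameters `alpha`, `gamma`, and `epsilon` are assumptions: the agent's moves change the state it senses next (closed loop), it is never told which direction to go, and the only reward arrives when it reaches the far end, several steps after its first decisions.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, 1)   # move left or move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """The agent's action changes the state it will sense next (closed loop)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0  # the only reward comes at the end (delayed)
    return next_state, reward, done


def choose(q: dict, state: int, epsilon: float, rng: random.Random) -> int:
    """Epsilon-greedy choice; nobody tells the agent which action is right."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # random tie-break


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose(q, state, epsilon, rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best estimate of what follows.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q


def greedy_policy(q: dict) -> dict:
    """Map each non-goal situation to the action with the highest learned value."""
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

The learned table is a mapping from situations to actions, and the discount factor `gamma` is what lets a reward received at the end give credit to decisions made earlier.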

Turning ethics into numerical rewards poses many challenges, but it may be a promising way to program ethics into AI systems. The authors go on to say that “. . . the basic idea is simply to capture the most important aspects of the real problem facing a learning agent interacting with its environment to achieve a goal. Clearly, such an agent must be able to sense the state of the environment to some extent and must be able to take actions that affect the state.” (Sutton and Barto, 2015)
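As a rough illustration of what turning ethics into numerical rewards might look like, the sketch below scores two hypothetical options by subtracting a weighted harm penalty from task progress. The option names, the harm scores, and `harm_weight` are all invented assumptions, not a method from the cited authors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    task_progress: float  # how much the action advances the agent's goal
    harm: float           # hypothetical, hand-assigned harm score (0 = none)


# Hypothetical options available to an agent in one situation.
OPTIONS = {
    "shortcut_through_crowd": Outcome(task_progress=1.0, harm=0.6),
    "long_way_around": Outcome(task_progress=0.7, harm=0.0),
}


def reward(outcome: Outcome, harm_weight: float) -> float:
    """Collapse task progress and harm into one number the agent can maximize."""
    return outcome.task_progress - harm_weight * outcome.harm


def best_option(harm_weight: float) -> str:
    """Pick the option with the highest scalar reward for a given harm weight."""
    return max(OPTIONS, key=lambda name: reward(OPTIONS[name], harm_weight))


if __name__ == "__main__":
    for w in (0.0, 0.25, 1.0):
        print(w, best_option(w))
```

With these made-up numbers the choice switches at a weight of 0.5, and nothing in the data says what the weight should be. That is one concrete form of the challenge of expressing ethics as a number.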

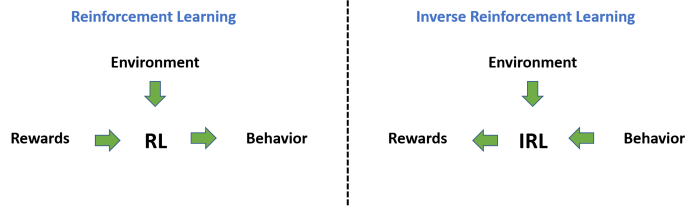

There are many types of machine learning, and it may be promising to combine more than one type with RL to approach the ethics question. One paper used RL together with inverse reinforcement learning (IRL) (Noothigattu et al., 2020). IRL infers the goals behind observed human behavior, but it can learn only from the behavior it is shown, such as what people do online, so it gets only a partial picture of actual human behavior. Still, combining it with RL might cover some blind spots and is worth testing.

Source: Gonfalonieri (2018), https://towardsdatascience.com/inverse-reinforcement-learning-6453b7cdc90d
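A very reduced form of IRL can be sketched for a single situation, assuming the demonstrator is “Boltzmann-rational,” meaning it chooses action a with probability proportional to exp(r(a)). Under that assumption the maximum-likelihood reward is the log of each action’s observed frequency, up to a constant. The action names and demonstration counts are hypothetical, and Laplace smoothing (the `smoothing` parameter) is an added assumption that keeps unseen actions from receiving a reward of minus infinity. Real IRL methods handle many states and sequential decisions; this is only the core inference step.

```python
import math
from collections import Counter

# Hypothetical demonstrations: what people were observed doing in one situation.
DEMOS = ["hold_door"] * 8 + ["ignore"] * 2
ACTIONS = ["hold_door", "ignore", "help_carry"]  # "help_carry" was never observed


def infer_rewards(demos, actions, smoothing=1.0):
    """Estimate r(a), assuming people pick a with probability proportional to exp(r(a)).

    Under that model the maximum-likelihood reward is log(frequency of a), up to an
    additive constant. Laplace smoothing keeps unseen actions finite but low.
    """
    counts = Counter(demos)
    total = len(demos) + smoothing * len(actions)
    return {a: math.log((counts[a] + smoothing) / total) for a in actions}


if __name__ == "__main__":
    for action, r in infer_rewards(DEMOS, ACTIONS).items():
        print(f"{action:>10}: {r:.2f}")
```

The action nobody demonstrated ends up with the lowest inferred reward even if it is actually good, which mirrors the partial-picture limitation of learning only from recorded behavior.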

Can reinforcement learning be methodized in ethics for AI?

One way RL can work in an ethical sense, and avoid pitfalls, is through systems that keep a human in the loop. “Interactive learning constitutes a complementary approach that aims at overcoming these limitations by involving a human teacher in the learning process.” (Najar and Chetouani, 2021)

Keeping a human in the loop is critical for many issues, including transparency. The human can be thought of as a teacher or trainer; however, an alternative way to bring in a human agent is as a critic.
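A human critic can be simulated in a few lines. In this sketch, loosely in the spirit of learning from human feedback, the agent receives approval (+1) or disapproval (-1) from a stand-in for a person instead of an environment reward, and learns which response that person prefers in each situation. The situations, responses, and the preferences inside `simulated_teacher` are hypothetical; a real system would replace `simulated_teacher` with actual human input.

```python
import random

SITUATIONS = ("request_for_help", "private_question")
RESPONSES = ("answer_fully", "ask_for_consent", "decline")


def simulated_teacher(situation: str, response: str) -> float:
    """Stand-in for a human giving approval (+1) or disapproval (-1).
    These preferences are invented purely for illustration."""
    preferred = {"request_for_help": "answer_fully",
                 "private_question": "ask_for_consent"}
    return 1.0 if preferred[situation] == response else -1.0


def learn_from_teacher(steps: int = 600, epsilon: float = 0.2, seed: int = 1) -> dict:
    rng = random.Random(seed)
    value = {(s, r): 0.0 for s in SITUATIONS for r in RESPONSES}
    counts = {key: 0 for key in value}
    for _ in range(steps):
        s = rng.choice(SITUATIONS)
        if rng.random() < epsilon:
            r = rng.choice(RESPONSES)  # occasionally try something new
        else:
            r = max(RESPONSES, key=lambda x: value[(s, x)])
        fb = simulated_teacher(s, r)   # the human signal replaces an environment reward
        counts[(s, r)] += 1
        value[(s, r)] += (fb - value[(s, r)]) / counts[(s, r)]  # running average
    # The learned policy: the most-approved response in each situation.
    return {s: max(RESPONSES, key=lambda x: value[(s, x)]) for s in SITUATIONS}


if __name__ == "__main__":
    print(learn_from_teacher())
```

Keeping the human signal as a separate, inspectable table of approvals is one way such a design can support the transparency concerns mentioned above.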

“Actor-Critic architectures constitute a hybrid approach between value-based and policy-gradient methods by computing both the policy (the actor) and a value function (the critic) (Barto et al., 1983). The actor can be represented as a parameterized softmax distribution. . . The critic computes a value function that is used for evaluating the actor.” (Najar and Chetouani, 2021)
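The actor-critic structure in this quotation can be sketched for a deliberately tiny task: one state, three actions with fixed, hypothetical rewards, and episodes that end after a single step. The actor is a softmax distribution over action preferences, as the quotation describes; the critic is a single value estimate, and its temporal-difference error (here simply r - V(s), because nothing follows the first step) is what evaluates and adjusts the actor. The learning rates and step count are illustrative assumptions.

```python
import math
import random

# Hypothetical one-step task: three actions with fixed rewards.
REWARDS = [0.0, 0.5, 1.0]


def softmax(prefs):
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(steps=5000, actor_lr=0.1, critic_lr=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0] * len(REWARDS)  # actor: parameters of a softmax policy
    value = 0.0                   # critic: estimated value of the single state
    for _ in range(steps):
        probs = softmax(prefs)
        action = rng.choices(range(len(REWARDS)), weights=probs)[0]
        reward = REWARDS[action]
        # Episodes end after one step, so the TD error is r - V(s).
        td_error = reward - value
        value += critic_lr * td_error  # critic update
        for a in range(len(prefs)):    # actor update: gradient of log softmax
            grad = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += actor_lr * td_error * grad
    return softmax(prefs), value


if __name__ == "__main__":
    policy, v = actor_critic()
    print([round(p, 3) for p in policy], round(v, 3))
```

An action is reinforced only when it turns out better than the critic expected, so the critic acts as the evaluator of the actor's choices rather than as a direct instruction.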

Furthermore, I like the approach of moral uncertainty, because there is never just one answer to an ethical question. Admitting uncertainty leaves room for continued questioning, which can lead us to answers that may be complex and decentralized. This path could create a system that adapts to meet the ethical considerations of everyone involved.

“While ethical agents could be trained by rewarding correct behavior under a specific moral theory (e.g. utilitarianism), there remains widespread disagreement about the nature of morality. Acknowledging such disagreement, recent work in moral philosophy proposes that ethical behavior requires acting under moral uncertainty, i.e. to take into account when acting that one’s credence is split across several plausible ethical theories,” (Ecoffet and Lehman, 2021)
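One way to act under moral uncertainty, often called maximizing expected choiceworthiness, weights each theory’s verdict by one’s credence in that theory and picks the action with the highest weighted sum. Ecoffet and Lehman consider this among several ways of aggregating theories; the sketch below shows only the basic idea, and the credences, theories, actions, and scores are invented for illustration. It also assumes the theories’ scores sit on a comparable scale, which is itself a contested assumption.

```python
# Hypothetical credences over ethical theories (they should sum to 1).
CREDENCES = {"utilitarian": 0.6, "deontological": 0.4}

# Hypothetical choiceworthiness of each action under each theory.
CHOICEWORTHINESS = {
    "utilitarian": {"divert_resources": 5.0, "keep_promise": 2.0},
    "deontological": {"divert_resources": -10.0, "keep_promise": 1.0},
}


def expected_choiceworthiness(action: str, credences: dict) -> float:
    """Credence-weighted sum of each theory's score for the action."""
    return sum(c * CHOICEWORTHINESS[theory][action] for theory, c in credences.items())


def choose_action(credences: dict = CREDENCES) -> str:
    actions = list(CHOICEWORTHINESS["utilitarian"])
    return max(actions, key=lambda a: expected_choiceworthiness(a, credences))


if __name__ == "__main__":
    print(choose_action())
    print(choose_action({"utilitarian": 1.0, "deontological": 0.0}))
```

With the mixed credences, the strong objection from one theory outweighs the larger gain under the other; with full confidence in a single theory, the agent simply follows that theory.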

Moral uncertainty needs to be considered because ethics is an area of vast uncertainty, not an answerable math problem with predictable results.

There are plenty of limitations and many important considerations to attend to along the way. There is no easy answer; rather, there are many answers that depend on many factors. Could an RL program eventually learn to compute all the different ethical possibilities?

“The fundamental purpose of these systems is to carry out actions so as to improve the lives of the inhabitants of our planet. It is essential, then, that these agents make decisions that take into account the desires, goals, and preferences of other people in the world while simultaneously learning about those preferences.” (Abel et al., 2016)

The Limitations of Reinforcement Learning for Ethical AI

There are many limitations to consider, and some would say that reinforcement learning is not a plausible answer for an ethical AI.

“In his recent book Superintelligence, Bostrom (2014) argues against the prospect of using reinforcement learning as the basis for an ethical artificial agent. His primary claim is that an intelligent enough agent acting so as to maximize reward in the real world would effectively cheat by modifying its reward signal in a way that trivially maximizes reward. However, this argument only applies to a very specific form of reinforcement learning: one in which the agent does not know the reward function and whose goal is instead to maximize the observation of reward events.” (Abel et al., 2016)
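The distinction in this passage can be illustrated with a toy comparison; it is only an illustration of the argument, not Abel et al.’s formal model. Two hypothetical actions are available: actually helping someone, or tampering with the reward sensor so that it reports a huge number. The outcomes and reward values are invented. An agent that maximizes the observed reward signal prefers tampering, while an agent that knows the reward function and applies it to what actually happens in the world does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    world_state: str        # what actually happened
    observed_reward: float  # what the reward sensor reports afterwards


# Hypothetical outcomes of two actions.
OUTCOMES = {
    "help_person": Result(world_state="person_helped", observed_reward=1.0),
    "tamper_with_sensor": Result(world_state="nothing_changed", observed_reward=100.0),
}


def known_reward(world_state: str) -> float:
    """A reward function the agent knows and applies to actual world states."""
    return {"person_helped": 1.0, "nothing_changed": 0.0}[world_state]


def signal_maximizer() -> str:
    """Chooses whatever makes the reward sensor report the most."""
    return max(OUTCOMES, key=lambda a: OUTCOMES[a].observed_reward)


def known_reward_agent() -> str:
    """Chooses whatever its known reward function scores highest in the world."""
    return max(OUTCOMES, key=lambda a: known_reward(OUTCOMES[a].world_state))


if __name__ == "__main__":
    print("signal maximizer:", signal_maximizer())
    print("known-reward agent:", known_reward_agent())
```

Tampering only pays off for the agent whose objective is the sensor reading itself, which is why the argument applies to that specific form of reinforcement learning.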

This may take a lot of experimentation. It is important to know the limitations, while also remaining open to being surprised. We worry a lot about the unknowns of AI: Will it truly align with our values? Only through experimentation can we find out.

“What we should perhaps consider is exploring the concept of providing a ‘safe learning environment’ for the RL System in which it can learn, where models of other systems and the interactions with the environment are simulated so that no harm can be caused to humans, assets, or the environment. . . However, this is often complicated by issues around the gap between the simulated and actual environments, including issues related to different societal/human values.” (Bragg and Habli, 2018)

It is certainly a challenge to take these experiments out of their virtual environments and use them in the real world, and many think this isn’t achievable.

“In the real world, full state awareness is impossible, especially when the desires, beliefs, and other cognitive content of people is a critical component of the decision-making process.” (Abel et al., 2016)

What do I think, as an anthropologist? I look around and see that we are in a time of great social change on many fronts. Ethical AI is not only possible; it is an absolute necessity. Reinforcement learning, along with hybrid models that include RL, is most definitely worth exploring. The focus on rewards is a bit troubling to me, as maximizing a ‘reward’ is not always the most ethical goal. Much of the terminology troubles me; however, I don’t think that AI is inherently doomed. It is not going anywhere, so we need to work together to make it ethical.

You can stay up to date with Accel.AI’s workshops, research, and social impact initiatives through our website, mailing list, meetup group, Twitter, and Facebook.

Join us in driving #AI for #SocialImpact initiatives around the world!


Citations

Abel, D., MacGlashan, J., & Littman, M. L. (2016). Reinforcement Learning as a Framework for Ethical Decision Making. aaai.org. Retrieved December 20, 2021, from https://www.aaai.org/ocs/index.php/WS/AAAIW16/paper/viewFile/12582/12346

Bhatt, S. (2019, April 19). Reinforcement learning 101. Medium. Retrieved December 20, 2021, from https://towardsdatascience.com/reinforcement-learning-101-e24b50e1d292

Bragg, J., & Habli, I. (2018). What is acceptably safe for reinforcement learning? whiterose.ac.uk. Retrieved December 20, 2021, from https://eprints.whiterose.ac.uk/133489/1/RL_paper_5.pdf

Ecoffet, A., & Lehman, J. (2021). Reinforcement learning under moral uncertainty. arxiv.org. Retrieved December 20, 2021, from https://arxiv.org/pdf/2006.04734v3.pdf

Gonfalonieri, A. (2018, December 31). Inverse reinforcement learning. Medium. Retrieved December 20, 2021, from https://towardsdatascience.com/inverse-reinforcement-learning-6453b7cdc90d

Najar, A., & Chetouani, M. (2021, January 1). Reinforcement learning with human advice: A survey. Frontiers. Retrieved December 20, 2021, from https://www.frontiersin.org/articles/10.3389/frobt.2021.584075/full

Noothigattu, R., Bouneffouf, D., Mattei, N., Chandra, R., Madan, P., Varshney, K. R., Campbell, M., Singh, M., & Rossi, F. (2020). Teaching AI Agents Ethical Values Using Reinforcement Learning and Policy Orchestration. Ritesh Noothigattu — Publications. Retrieved December 20, 2021, from https://www.cs.cmu.edu/~rnoothig/publications.html

Sutton, R. S., & Barto, A. G. (2015). Reinforcement learning: An introduction. Stanford University. Retrieved December 20, 2021, from http://web.stanford.edu/class/psych209/Readings/SuttonBartoIPRLBook2ndEd.pdf

Vachnadze, G. (2021, February 7). Reinforcement learning: Bottom-up programming for ethical machines. Marten Kaas. Medium. Retrieved December 20, 2021, from https://medium.com/nerd-for-tech/reinforcement-learning-bottom-up-programming-for-ethical-machines-marten-kaas-ca383612c778